Cleaning up a bad GPS log file

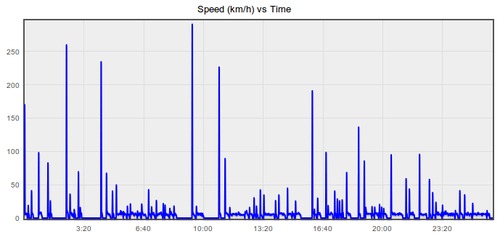

On a run the other day, my GPS logger (an AMOD AGL3080) recorded a really noisy signal, and the resulting trace was inaccurate for the first 10 minutes. I have no idea why this happened; the second 10 minutes were fine.

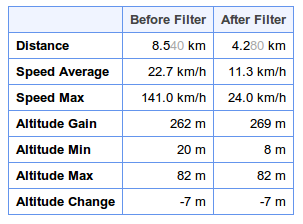

The end result was an almost unusable log: the distance recorded was twice what it should have been, and the average speed was way off.

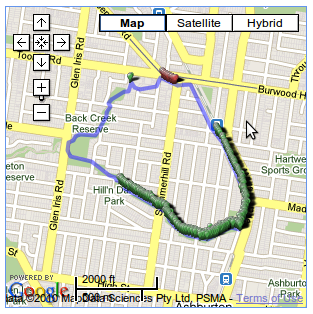

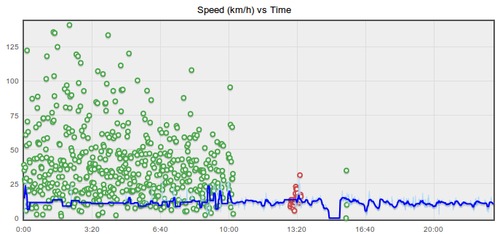

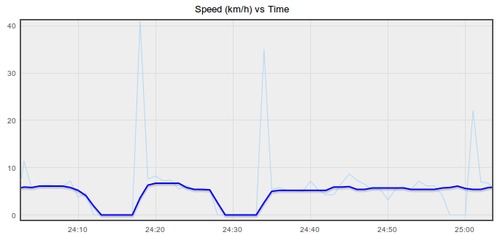

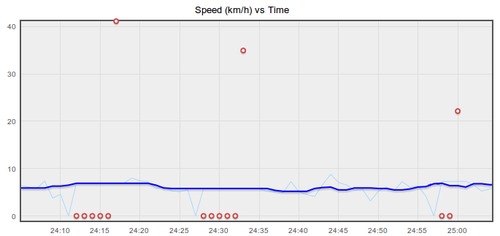

By experimenting with the Speed Filter, I was able to find a set of filters that cleaned up the log remarkably well, meaning I could include it in my training stats after all. The following graph and map show the discarded points and the resulting "cleaned" data:

The red points are discarded by a Radius Filter, because that section of the route goes down a laneway where the signal is generally very poor.
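GPSLog Labs' own implementation isn't shown here, but a radius filter of this kind can be sketched in Python, assuming it simply discards every point within a fixed distance of a chosen centre point (such as the middle of that laneway). The track format (a list of `(seconds, lat, lon)` tuples) and the names `haversine_m` and `radius_filter` are hypothetical, for illustration only:

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def radius_filter(points, centre_lat, centre_lon, radius_m):
    """Hypothetical radius filter: split (seconds, lat, lon) points into
    (kept, discarded), discarding any point within radius_m of the centre."""
    kept, discarded = [], []
    for p in points:
        if haversine_m(p[1], p[2], centre_lat, centre_lon) <= radius_m:
            discarded.append(p)  # inside the known bad-signal area
        else:
            kept.append(p)
    return kept, discarded
```

Returning the discarded points as well as the kept ones makes it easy to plot them separately, like the red points on the map.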

The green points are discarded by the Speed Filter and show how bad the signal was for the first 10 minutes.
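As a rough illustration of the idea (not GPSLog Labs' actual code), a speed filter can be sketched as dropping any point that could only have been reached from the previous kept point at an implausible speed. This assumes the same hypothetical `(seconds, lat, lon)` track format; `speed_filter` and `max_speed_kmh` are illustrative names:

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def speed_filter(points, max_speed_kmh):
    """Hypothetical speed filter: split (seconds, lat, lon) points into
    (kept, discarded). A point is discarded if the speed implied by moving
    from the last *kept* point to it exceeds max_speed_kmh."""
    if not points:
        return [], []
    kept, discarded = [points[0]], []  # the first point is trusted
    max_speed_ms = max_speed_kmh / 3.6
    for p in points[1:]:
        prev = kept[-1]
        dt = p[0] - prev[0]
        dist = haversine_m(prev[1], prev[2], p[1], p[2])
        if dt <= 0 or dist / dt > max_speed_ms:
            discarded.append(p)  # implausible jump (or bad timestamp)
        else:
            kept.append(p)
    return kept, discarded
```

Comparing against the last kept point rather than the last raw point means a single wild fix doesn't also cause its well-behaved neighbour to be rejected. One caveat of this sketch is that it trusts the first point, so if a log starts with a bad fix, the filter may discard good points that follow it.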

The speed is also smoothed with a 10-second Speed Median filter, but the discard filters do the bulk of the work.
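A median filter suits GPS speeds because a single spike barely moves the median of its window, whereas it would noticeably drag up a mean. Here is a minimal sketch, assuming the window is centred on each sample (GPSLog Labs may well use a trailing window instead); `median_smooth` and `window_s` are hypothetical names:

```python
import statistics


def median_smooth(times, speeds, window_s=10.0):
    """Replace each speed with the median of all speeds whose timestamps
    (in seconds) fall within a window of window_s seconds centred on it."""
    half = window_s / 2
    smoothed = []
    for t in times:
        window = [s for tt, s in zip(times, speeds) if abs(tt - t) <= half]
        smoothed.append(statistics.median(window))
    return smoothed
```

This scans the whole log for every sample, which is fine for a sketch; a real implementation on long logs would slide the window along the sorted timestamps instead.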

I had to experiment with the Speed Filter parameters, varying the cut-off speed until the log's distance was correct (based on previous logs along the same route). When the cut-off was too high, the resulting distance was still too long; when it was too low, too many points were discarded and the distance was too short. Once the distance was right, the resulting average speeds were reasonable enough for me to use. As the table of before and after stats shows, the results were pretty dramatic:

This is the most successful filtering of a log I've seen so far; generally the filters remove much smaller amounts of noise, so it's great to know they can be this powerful. I hope other GPSLog Labs users can get similar results, as it's very frustrating when a log of your activity doesn't record well and is unusable.
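The manual tuning described above, varying the Speed Filter cut-off until the log's distance matched previous runs along the same route, can be sketched as a simple sweep over candidate cut-offs. This is a hypothetical illustration, not a GPSLog Labs feature: it repeats a hedged `speed_filter` (again on hypothetical `(seconds, lat, lon)` tuples) so that it runs on its own, and `reference_m` stands in for the known route distance:

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def speed_filter(points, max_speed_kmh):
    """Hypothetical speed filter: keep only points reachable from the last
    kept point at no more than max_speed_kmh."""
    if not points:
        return []
    kept = [points[0]]
    max_speed_ms = max_speed_kmh / 3.6
    for p in points[1:]:
        prev = kept[-1]
        dt = p[0] - prev[0]
        dist = haversine_m(prev[1], prev[2], p[1], p[2])
        if dt > 0 and dist / dt <= max_speed_ms:
            kept.append(p)
    return kept


def track_distance_m(points):
    """Total track length in metres, summing consecutive segments."""
    return sum(haversine_m(a[1], a[2], b[1], b[2]) for a, b in zip(points, points[1:]))


def tune_cutoff(points, reference_m, candidates_kmh):
    """Return the candidate cut-off (km/h) whose filtered track distance
    is closest to the known reference distance."""
    return min(
        candidates_kmh,
        key=lambda c: abs(track_distance_m(speed_filter(points, c)) - reference_m),
    )
```

Picking the closest match from a list, rather than bisecting, avoids assuming that the filtered distance shrinks perfectly smoothly as the cut-off drops, although the too-high/too-low behaviour described above suggests it mostly does.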